Re: Render to Framebuffer

Posted: Sat Jul 30, 2022 9:45 pm

Yes, it seems to work the same with RGBA16F as with just RGBA.

For information and discussion about Oolite.

https://bb.oolite.space/

Code:

```glsl
// Needs GLSL 1.50 or later (e.g. "#version 330 core") for the VsOut interface block.
out vec4 out_color;

in VsOut
{
    vec2 texture_coordinate;
} vs_out;

uniform sampler2D image;  // the rendered scene (the framebuffer's color texture)
uniform float uTime;      // time value supplied by the host code ([self getTime])

vec3 ACESFilm(vec3 color)
{
    // taken from https://www.shadertoy.com/view/XsGfWV This is based on the actual
    // ACES sources and is effectively the glsl translation of Stephen Hill's fit
    // (https://github.com/TheRealMJP/BakingLab/blob/master/BakingLab/ACES.hlsl)
    mat3 m1 = mat3(
        0.59719, 0.07600, 0.02840,
        0.35458, 0.90834, 0.13383,
        0.04823, 0.01566, 0.83777
    );
    mat3 m2 = mat3(
        1.60475, -0.10208, -0.00327,
        -0.53108, 1.10813, -0.07276,
        -0.07367, -0.00605, 1.07602
    );
    // prevents some ACES artifacts, especially bright blues shifting towards purple
    // see https://community.acescentral.com/t/colour-artefacts-or-breakup-using-aces/520/48
    mat3 highlightsFixLMT = mat3(
        0.9404372683, -0.0183068787, 0.0778696104,
        0.0083786969, 0.8286599939, 0.1629613092,
        0.0005471261, -0.0008833746, 1.0003362486
    );
    // GLSL matrices are column-major, so vec3 * mat3 (row vector on the left)
    // applies the LMT with its rows exactly as written above.
    vec3 v = m1 * color * highlightsFixLMT;
    vec3 a = v * (v + 0.0245786) - 0.000090537;
    vec3 b = v * (0.983729 * v + 0.4329510) + 0.238081;
    return clamp(m2 * (a / b), 0.0, 1.0);
}

// Curved-screen distortion of the texture coordinates.
vec2 crt_coords(vec2 uv, float bend)
{
    uv -= 0.5;
    uv *= 2.;
    uv.x *= 1. + pow(abs(uv.y) / bend, 2.);
    uv.y *= 1. + pow(abs(uv.x) / bend, 2.);
    uv /= 2.02;
    return uv + .5;
}

// Darkens the image towards the edges of the screen.
float vignette(vec2 uv, float size, float smoothness, float edgeRounding)
{
    uv -= .5;
    uv *= size;
    float amount = sqrt(pow(abs(uv.x), edgeRounding) + pow(abs(uv.y), edgeRounding));
    amount = 1. - amount;
    return smoothstep(0., smoothness, amount);
}

// Moving horizontal bands.
float scanline(vec2 uv, float lines, float speed)
{
    return sin(uv.y * lines + uTime * speed);
}

// Pseudo-random value in [0, 1), reseeded slowly by uTime.
float random(vec2 uv)
{
    return fract(sin(dot(uv, vec2(15.5151, 42.2561))) * 12341.14122 * sin(uTime * 0.03));
}

// Value noise: smooth interpolation of random() between the four corners of each cell.
float noise(vec2 uv)
{
    vec2 i = floor(uv);
    vec2 f = fract(uv);
    float a = random(i);
    float b = random(i + vec2(1., 0.));
    float c = random(i + vec2(0., 1.));
    float d = random(i + vec2(1.));
    vec2 u = smoothstep(0., 1., f);
    return mix(a, b, u.x) + (c - a) * u.y * (1. - u.x) + (d - b) * u.x * u.y;
}

vec4 CRT()
{
    vec2 uv = vs_out.texture_coordinate;
    vec2 crt_uv = crt_coords(uv, 8.);
    float s1 = scanline(uv, 1500., -10.);
    float s2 = scanline(uv, 10., -3.);
    // green and alpha come from the distorted coordinate itself; red and blue are
    // sampled slightly above/below it for a colour-fringing effect
    vec4 col = texture(image, crt_uv);
    col.r = texture(image, crt_uv + vec2(0., 0.0025)).r;
    col.b = texture(image, crt_uv + vec2(0., -0.0025)).b;
    col = mix(col, vec4(s1 + s2), 0.05);
    col = mix(col, vec4(noise(uv * 500.)), 0.05) * vignette(uv, 1.9, .6, 8.);
    return col;
}

// Rec. 709 luma weights.
vec4 grayscale(vec4 col)
{
    vec3 luma = vec3(0.2126, 0.7152, 0.0722);
    col.rgb = vec3(dot(col.rgb, luma));
    return col;
}

float hash(float n)
{
    return fract(sin(n) * 43758.5453123);
}

vec4 nightVision()
{
    vec2 p = vs_out.texture_coordinate;
    vec2 u = p * 2. - 1.;
    vec2 n = u;
    vec3 c = texture(image, p).xyz;
    // flicker, grain, vignette, fade in
    c += sin(hash(uTime)) * 0.01;
    c += hash((hash(n.x) + n.y) * uTime) * 0.25;
    c *= smoothstep(length(n * n * n * vec2(0.075, 0.4)), 1.0, 0.4);
    c *= smoothstep(0.001, 3.5, uTime) * 1.5;
    c = grayscale(vec4(c, 1.0)).rgb * vec3(0.2, 1.5 - hash(uTime) * 0.1, 0.4);
    return vec4(c, 1.0);
}

void main()
{
    // uncomment only one of these four lines
    out_color = texture(image, vs_out.texture_coordinate); // original - no fx
    //out_color = CRT();
    //out_color = grayscale(texture(image, vs_out.texture_coordinate));
    //out_color = nightVision();

    // exposure: values above 1.0 brighten, below 1.0 darken
    out_color.rgb *= 1.0;

    // tone mapping and gamma correction
    out_color.rgb = ACESFilm(out_color.rgb);
    out_color.rgb = pow(out_color.rgb, vec3(1.0/2.2));
}
```

Code:

```objc
// fixes transparency issue: RGB is written straight through (GL_ONE, GL_ZERO)
// while alpha is composited (GL_ONE, GL_ONE_MINUS_SRC_ALPHA)
// got it here: https://forum.openframeworks.cc/t/weird-problem-rendering-semi-transparent-image-to-fbo/2215/17
glEnable(GL_BLEND);
glBlendFuncSeparate(GL_ONE, GL_ZERO, GL_ONE, GL_ONE_MINUS_SRC_ALPHA);
glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glUseProgram([textureProgram program]);
// the "image" sampler reads from texture unit 0
glUniform1i(glGetUniformLocation([textureProgram program], "image"), 0);
glUniform1f(glGetUniformLocation([textureProgram program], "uTime"), [self getTime]); // <----------------- It's this line

glActiveTexture(GL_TEXTURE0);
glBindTexture(GL_TEXTURE_2D, targetTextureID);
```
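The blend setup above can be checked on the CPU. This sketch assumes the default `GL_FUNC_ADD` blend equation (result = src * srcFactor + dst * dstFactor) and a freshly cleared, all-zero target. Under those assumptions, the usual `glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA)` multiplies alpha by itself, so a 50%-transparent pixel lands with alpha 0.25. The separate function keeps 0.5. This is a plausible reading of the "transparency issue", not a confirmed diagnosis. `blend_standard` and `blend_separate` are hypothetical helpers.

```c
#include <assert.h>

typedef struct { float r, g, b, a; } rgba;

/* glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA) with GL_FUNC_ADD:
   every channel, alpha included, is weighted by src.a. */
static rgba blend_standard(rgba src, rgba dst)
{
    float s = src.a, d = 1.0f - src.a;
    rgba out = { src.r * s + dst.r * d,
                 src.g * s + dst.g * d,
                 src.b * s + dst.b * d,
                 src.a * s + dst.a * d };   /* alpha ends up as src.a * src.a on a cleared target */
    return out;
}

/* glBlendFuncSeparate(GL_ONE, GL_ZERO, GL_ONE, GL_ONE_MINUS_SRC_ALPHA) with GL_FUNC_ADD:
   RGB replaces the destination, alpha is composited "over" it. */
static rgba blend_separate(rgba src, rgba dst)
{
    assert(src.a >= 0.0f && src.a <= 1.0f);
    rgba out = { src.r, src.g, src.b, src.a + dst.a * (1.0f - src.a) };
    return out;
}
```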

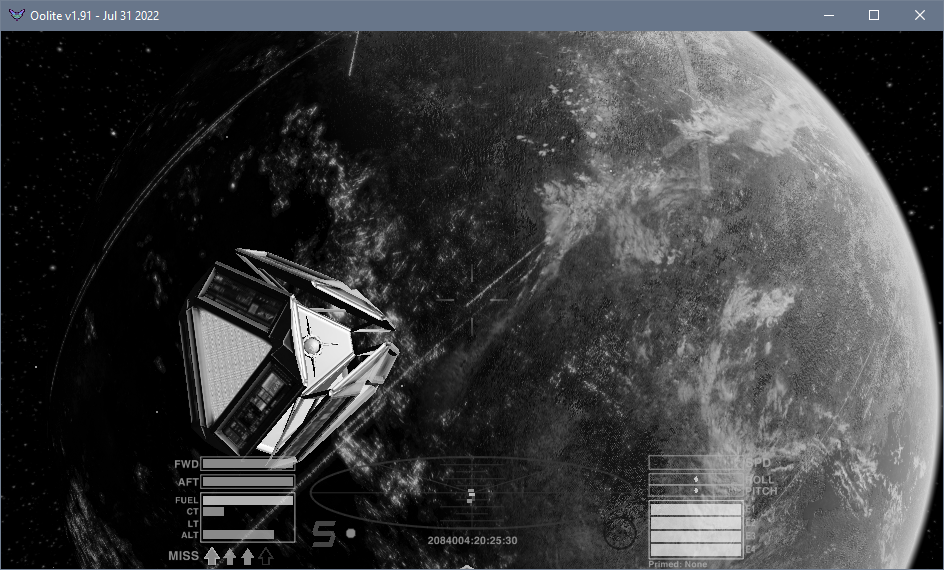

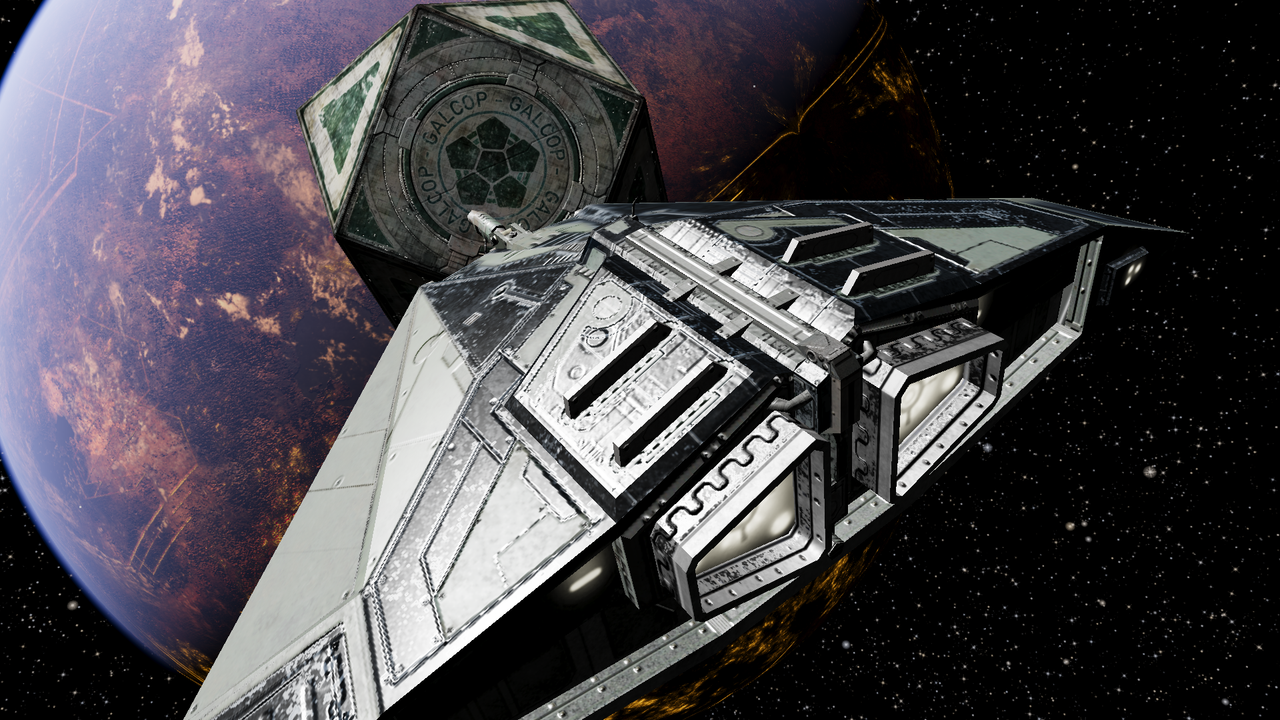

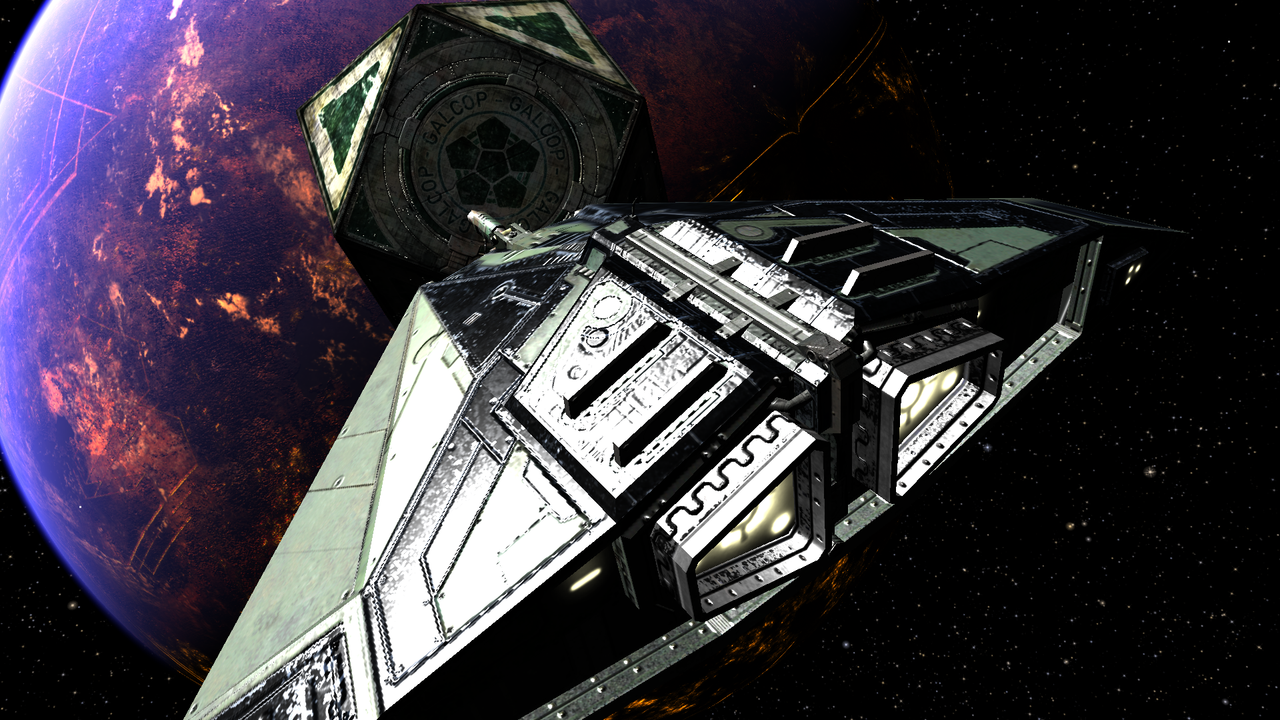

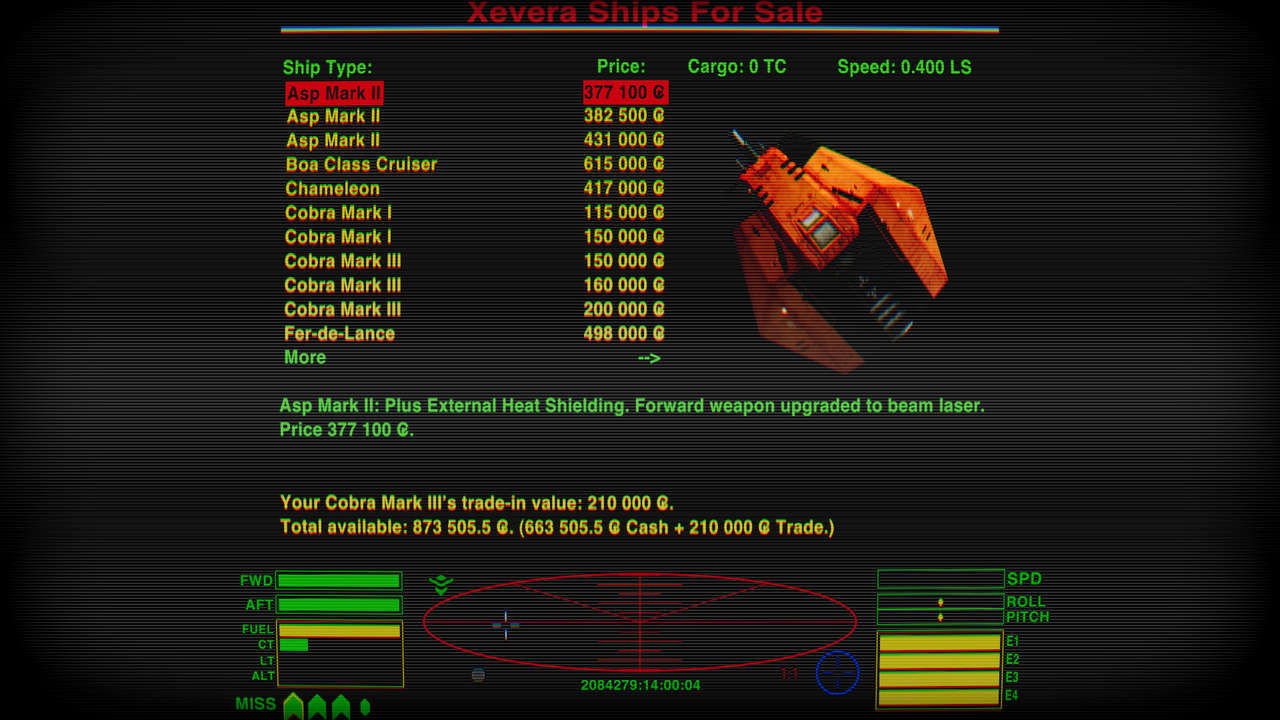

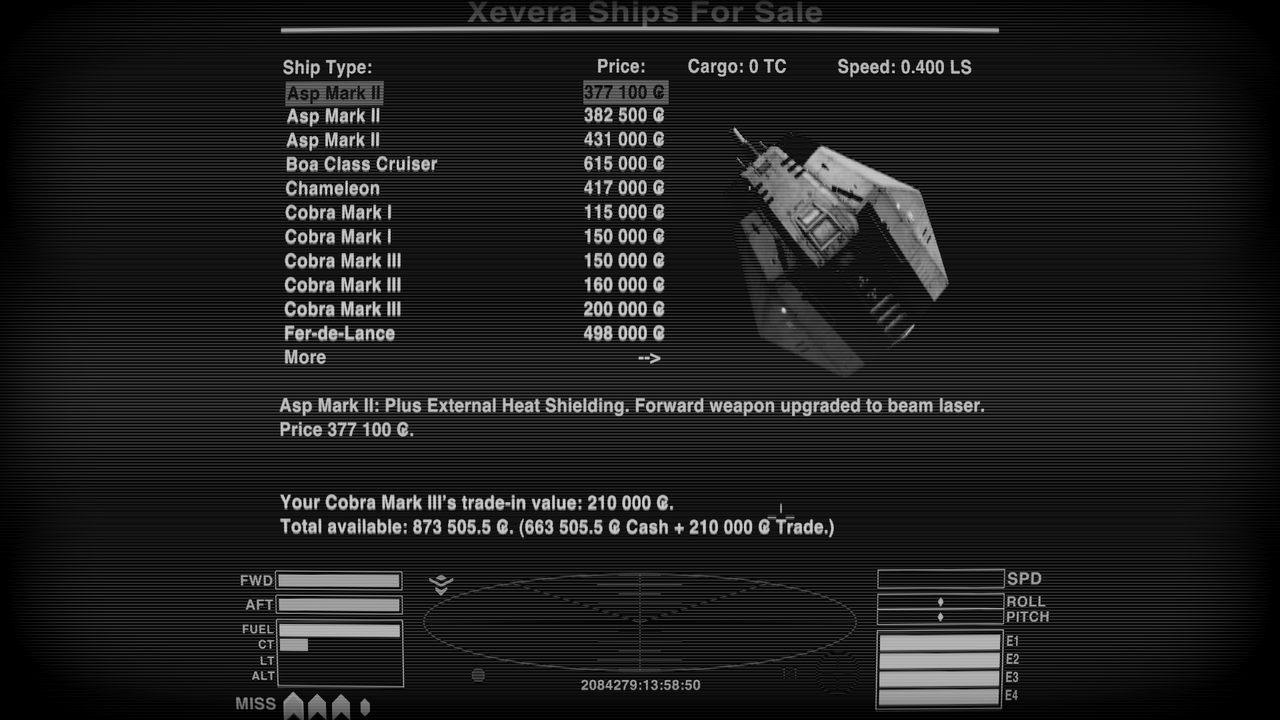

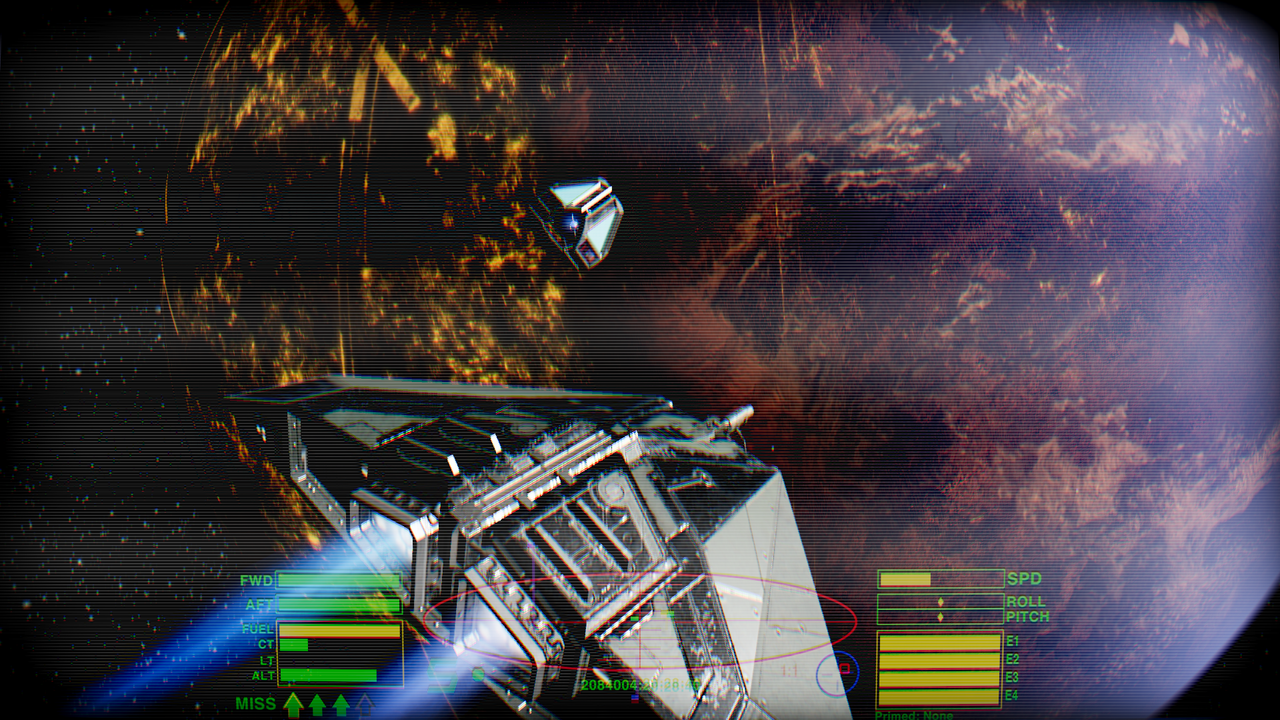

> Griff wrote: Tue Aug 02, 2022 10:37 am
>
> Am I totally misunderstanding what's possible now, or is this the same as a render pass? I remember some of the example shader effects in AMD's RenderMonkey used a second pass. There was an evil skull example whose eyes glowed when it looked at you; it used a second pass to overlay a "billboarded" graphic, similar to Oolite's built-in 'flasher' object, to achieve the effect. It looked cool, and I always thought something similar could be added to the ship engines.

It is a post-processing render pass: it operates on all of the screen's pixels in one go. What RenderMonkey was doing for the glow effect was most likely a bloom operation, which is entirely achievable now that we can render our scene to a framebuffer. We are not quite there yet, as further code changes are required and a couple of bugs remain to be squashed, but let's say we are around 95% of the way. The hardest part was tsoj's work.
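Bloom is usually built from extra passes over the framebuffer texture: isolate the pixels brighter than a threshold, blur them, and add them back. Below is a minimal CPU sketch of that idea over a single row of HDR values. `bloom_row`, `threshold`, `radius`, `intensity` and `BLOOM_MAX` are hypothetical names. A real implementation would run in shaders, in 2-D, and would typically use a Gaussian blur split into horizontal and vertical passes rather than the box blur used here.

```c
#include <assert.h>
#include <stddef.h>

#define BLOOM_MAX 256  /* hypothetical row-length limit for this sketch */

/* 1. bright pass: keep only the part of each pixel above `threshold`
   2. blur: box blur of radius `radius`; pixels beyond the edge count as black
   3. composite: add the blurred highlights back, scaled by `intensity` */
static void bloom_row(const float *in, float *out, size_t n,
                      float threshold, int radius, float intensity)
{
    float bright[BLOOM_MAX];
    assert(n <= BLOOM_MAX && radius >= 0);

    for (size_t i = 0; i < n; i++)
        bright[i] = in[i] > threshold ? in[i] - threshold : 0.0f;

    for (size_t i = 0; i < n; i++) {
        float sum = 0.0f;
        for (int k = -radius; k <= radius; k++) {
            long j = (long)i + k;
            if (j >= 0 && j < (long)n)
                sum += bright[j];
        }
        out[i] = in[i] + intensity * sum / (float)(2 * radius + 1);
    }
}
```

A single pixel at 3.0 in a dark row spills light into its neighbours, while pixels far from it stay unchanged. Thresholds above 1.0 only make sense with a floating-point target such as RGBA16F.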

In the `void main()` function, you can switch between the post-processing effects currently available. To use one, uncomment its line by removing the '//' at the beginning. Make sure only that line is uncommented and all the others stay disabled (i.e. commented out by adding '//', without quotes, at the start of the line). In the future, once render to framebuffer has been fully sorted out, we may look into ways of switching the effects from within the game.

Code:

```
[startup.exception]: ***** Unhandled exception during startup: NSInvalidArgumentException (Tried to add nil value for key 'key_roll_left' to dictionary).
```