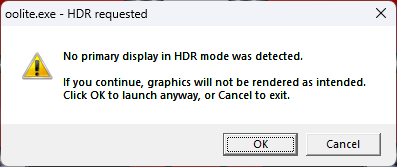

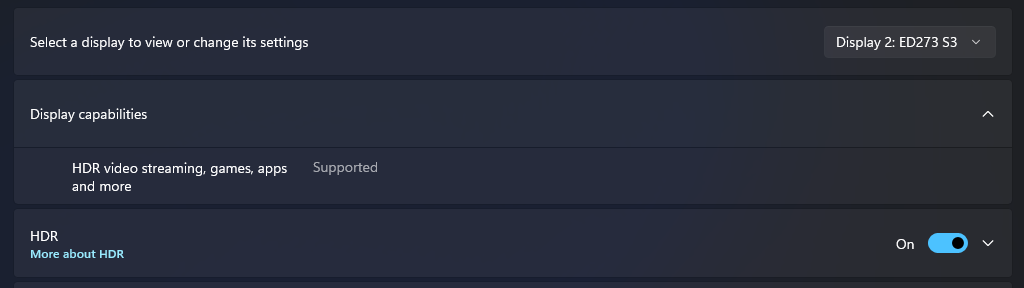

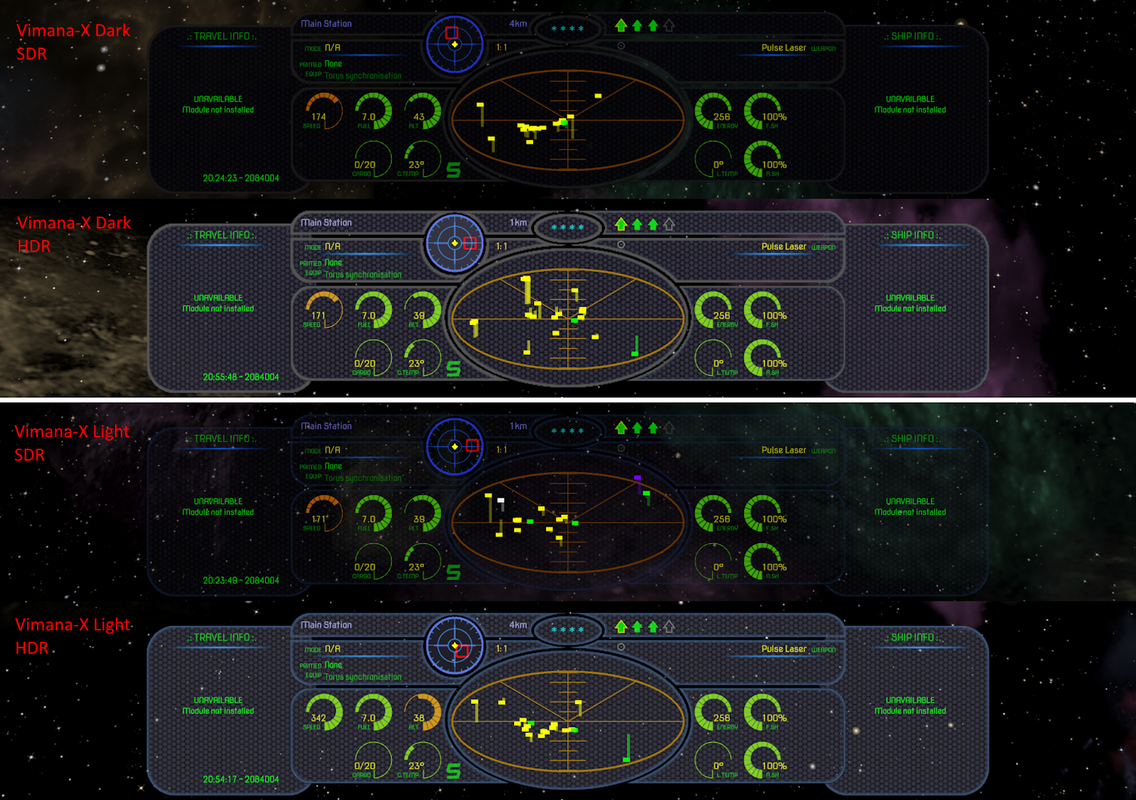

OK, now you've made me go back and have a good look at it again. So here is what is happening with color saturation and HDR vs SDR in Oolite.

I downloaded a screen test pattern image to use as a basis for color accuracy tests. This will be our reference test image:

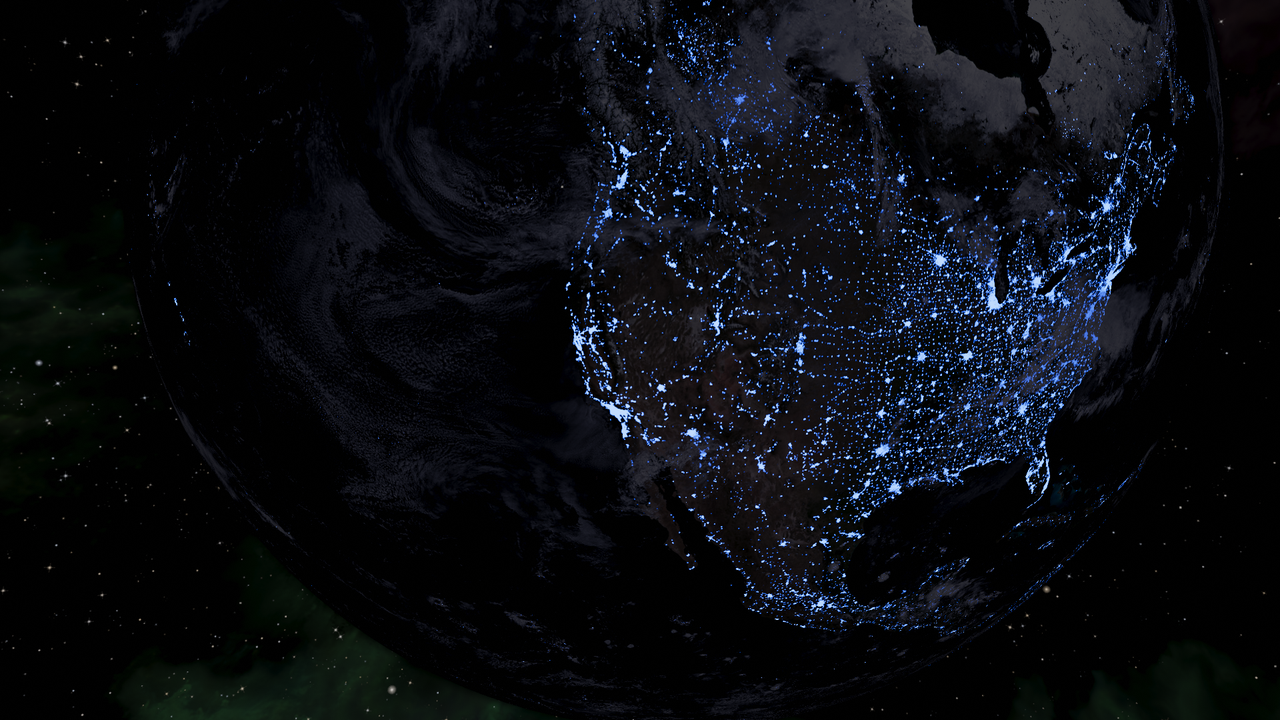

I then ran a mission screen with this image as background in SDR. This is the result:

And as you can see, the accuracy of the image reproduction in SDR is not great. There are noticeable differences; all of them are explainable and due to the ACES tone mapper we use, but maybe also due to some sRGB/gamma 2.2 mismatch, which is beyond the scope of this test. So anyway, we know that the SDR image has lower saturation than the reference and is a bit darker too.

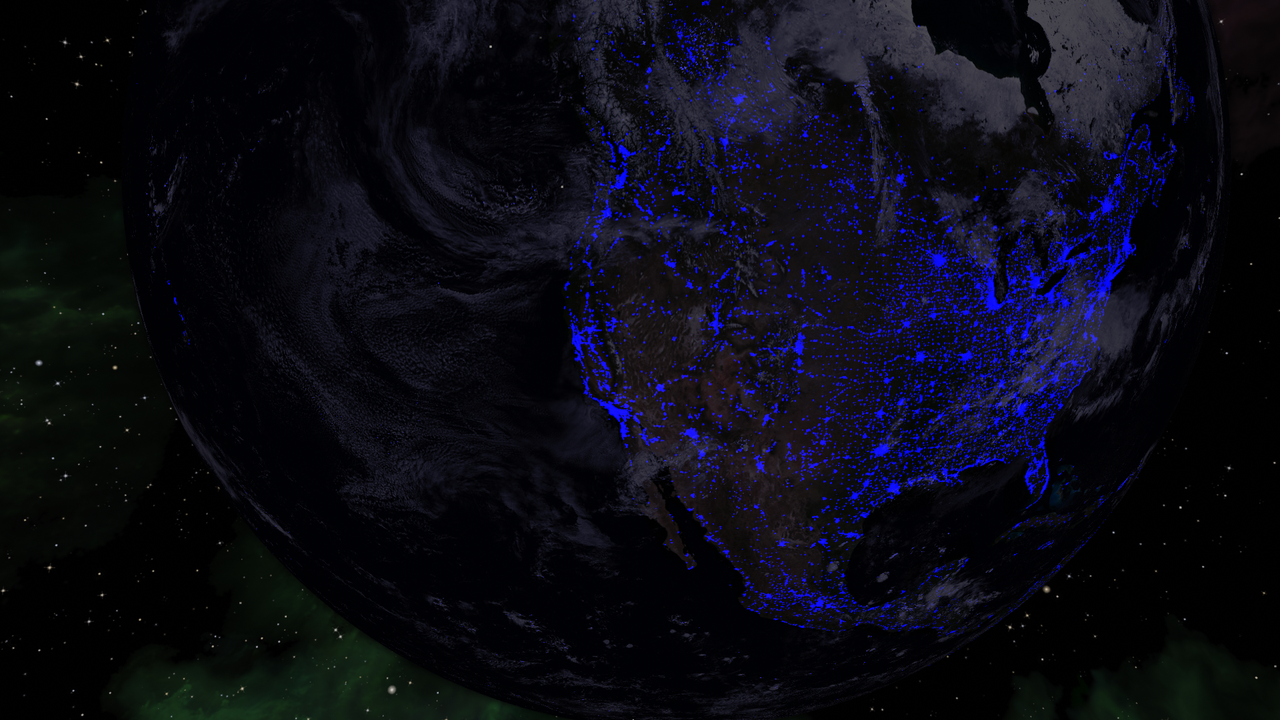

Next, with Oolite's current HDR saturation settings, I ran the same mission screen in HDR. The result, which should be viewed in HDR and on a site that can interpret HDR PNGs like https://upscale-compare.lebaux.co/ or in an HDR PNG capable viewer like SKIV, has been uploaded here:

https://drive.google.com/file/d/1m_AMnJ ... sp=sharing

You will find that the result is much, much closer to the original reference image. There are some slight differences in brightness, but the color saturation is quite spot on. However, because the SDR in-game image is not very accurate, this HDR test image looks like it's off when you compare it to SDR, when in reality it is the more correct one.

So what can we do about it? I am thinking that if we sacrifice some color saturation accuracy, we could bring the HDR image closer to the SDR one (because at the end of the day, what we have learned to see in the game are SDR tone mapped images). To achieve this, we can adjust the SATURATION_ADJUSTMENT constant in the oolite-final-hdr.fragment shader a bit, from its current (and more correct) value of 0.825 to 0.725. This constant is used to adjust color saturation in HDR, to compensate for the different tone mappers used by the SDR and HDR shaders; both are ACES, but different implementations.
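To give a rough idea of what a constant like this does, here is a minimal Python sketch of a saturation scale. It is not the actual shader code: the function name `adjust_saturation`, the `LUMA_WEIGHTS` table and the use of Rec. 709 luma weights are all assumptions made for illustration, and the real shader may apply SATURATION_ADJUSTMENT differently. The idea is simply that each channel is pulled toward the pixel's luminance, so a value below 1.0 desaturates while keeping brightness the same.

```python
# Illustrative only: a common way to scale saturation, NOT Oolite's shader code.
# Rec. 709 luma weights are an assumption; the real shader may use others.
LUMA_WEIGHTS = (0.2126, 0.7152, 0.0722)


def adjust_saturation(rgb, saturation):
    """Blend a linear RGB color toward its own luminance.

    saturation = 1.0 leaves the color untouched, 0.0 gives pure grey.
    """
    luma = sum(w * c for w, c in zip(LUMA_WEIGHTS, rgb))
    # Same as GLSL mix(vec3(luma), rgb, saturation)
    return tuple(luma + saturation * (c - luma) for c in rgb)


if __name__ == "__main__":
    pure_red = (1.0, 0.0, 0.0)
    for value in (0.825, 0.725):
        print(value, adjust_saturation(pure_red, value))
```

With a scheme like this, going from 0.825 to 0.725 moves every color a little closer to grey without changing its luminance, which is the kind of trade-off being discussed here.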

With SATURATION_ADJUSTMENT lowered just a little bit to 0.725, the result is now this:

https://drive.google.com/file/d/1Pe8Xef ... sp=sharing

This is closer to the SDR image generated in game, though still not quite the same. However, this is when we compare the test images. In-game, the differences in rendered scenes are practically absent, and I think that maybe 0.725 should be the new default. Effects like lasers and hyperjumps still spill into DCI-P3/BT.2020, which is good in my book.

Simba, please have a look and let me know your opinion. I'd be interested to hear your thoughts.